Benchmarking ArcticDB

Georgi Petrov

Jul 31, 2024

ArcticDB relies on continuous improvement and rigorously tested updates. This article looks at the benchmarking process we’ve adopted, which helps ensure that each change integrates cleanly and does not degrade ArcticDB’s performance, and ideally improves it. We’ll cover our benchmarking strategy, the specific requirements driving our choices, and the role these benchmarks play in the development and maintenance of ArcticDB.

What is ASV, and How Do We Use It?

Airspeed Velocity (ASV) is a benchmarking tool widely used by Python developers to track performance over time. It is used in high-profile projects such as NumPy, Arrow, and SciPy. ASV automates the tedious work of benchmarking different versions of a library, so developers can systematically compare performance across updates and releases. At ArcticDB, we run our ASV benchmarks in two ways:

Nightly Runs on Main Branch: ASV runs benchmarks on the main branch each night, updating performance graphs that provide ongoing insights into our library’s efficiency and speed.

On-Demand Benchmarking for Pull Requests: ASV benchmarks the changes pushed to any branch with an open PR and compares the results against the main branch. If it detects a performance regression of more than 15%, the benchmark job fails. This catches performance dips early so they can be addressed quickly, which helps us strike a balance between continuous improvement and reliability.
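The PR check boils down to comparing each benchmark’s timing on the branch with its timing on main. The sketch below is a hypothetical illustration of that rule with a 15% threshold, not ArcticDB’s CI code; the function name, benchmark names and timings are made up. ASV’s own `asv continuous` command applies a similar threshold through its `--factor` option (for example, `--factor 1.15`).

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical threshold: fail when a benchmark on the PR branch is more
# than 15% slower than the same benchmark on main.
REGRESSION_FACTOR = 1.15


@dataclass
class Comparison:
    name: str
    main_seconds: float
    pr_seconds: float

    @property
    def ratio(self) -> float:
        """How many times slower (>1) or faster (<1) the PR is than main."""
        return self.pr_seconds / self.main_seconds


def find_regressions(
    main: dict[str, float],
    pr: dict[str, float],
    factor: float = REGRESSION_FACTOR,
) -> list[Comparison]:
    """Return benchmarks timed on both branches whose PR time exceeds main * factor."""
    regressions = []
    for name in sorted(main.keys() & pr.keys()):
        comparison = Comparison(name, main[name], pr[name])
        if comparison.ratio > factor:
            regressions.append(comparison)
    return regressions


if __name__ == "__main__":
    # Made-up timings in seconds, keyed by benchmark name.
    main_timings = {"time_read": 0.100, "time_write": 0.200, "time_append": 0.050}
    pr_timings = {"time_read": 0.110, "time_write": 0.250, "time_append": 0.049}

    failures = find_regressions(main_timings, pr_timings)
    for c in failures:
        print(f"REGRESSION {c.name}: {c.ratio:.2f}x the time on main")
    print("benchmark check", "failed" if failures else "passed")
```

Because individual timings are noisy, a margin like 15% helps avoid failing a PR on measurement jitter alone, while still flagging changes large enough to matter.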

Additionally, ASV can generate and export performance graphs, which we host publicly on GitHub Pages for ArcticDB. This setup lets developers and users see performance changes at a glance as each new run is published.

Adding and Managing Benchmarks in ArcticDB

Structure of Benchmarking Code

In ArcticDB, we group the benchmarks by the type of functionality they assess, so that every area of the database is evaluated. Here’s how these benchmarks are structured:

Basic Functions: This category benchmarks fundamental operations such as read, write, append, and update. We benchmark these operations against local storage to check their efficiency and reliability in everyday database interactions.

List Functions: Benchmarks in this group measure the performance of listing operations, which are crucial for database management tasks such as retrieving symbols or browsing the versions stored within the database.

Local Query Builder (QB): This set assesses the speed and resource usage of query builder operations in general, run against local storage.

Persistent Query Builder: Designed to evaluate query builder operations against persistent storage such as AWS S3, this category tests the scalability and performance of ArcticDB when handling larger datasets, which are typical in production environments.
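ASV discovers benchmarks by name: methods whose names start with `time_` are timed, `setup` and `teardown` run around them, and `params` with `param_names` repeat each benchmark over several inputs. As a minimal sketch, assuming ArcticDB’s public `Arctic` and `QueryBuilder` API and local LMDB storage, the Basic Functions and Local Query Builder categories might look like this. The class names, library and symbol names, column names and row counts are illustrative assumptions, not ArcticDB’s actual benchmark suite.

```python
import shutil
import tempfile


def _make_lmdb_library(name):
    """Create a throwaway LMDB-backed library in a temp directory (hypothetical helper)."""
    # Imported lazily so ASV can collect this module without loading ArcticDB.
    from arcticdb import Arctic

    path = tempfile.mkdtemp(prefix="arcticdb_asv_")
    ac = Arctic(f"lmdb://{path}")
    return path, ac, ac.get_library(name, create_if_missing=True)


def _make_frame(rows):
    """Build a timestamp-indexed DataFrame with made-up columns."""
    import numpy as np
    import pandas as pd

    index = pd.date_range("2000-01-01", periods=rows, freq="min")
    return pd.DataFrame(
        {"price": np.random.default_rng(0).random(rows), "qty": np.arange(rows)},
        index=index,
    )


class BasicFunctions:
    """Times write and read against local LMDB storage."""

    params = [10_000, 1_000_000]  # rows per DataFrame
    param_names = ["rows"]

    def setup(self, rows):
        self.path, self.ac, self.lib = _make_lmdb_library("basic")
        self.df = _make_frame(rows)
        self.lib.write("sym", self.df)

    def teardown(self, rows):
        self.ac.delete_library("basic")
        shutil.rmtree(self.path, ignore_errors=True)

    def time_write(self, rows):
        self.lib.write("sym_write", self.df)

    def time_read(self, rows):
        self.lib.read("sym")


class LocalQueryBuilder:
    """Times a simple QueryBuilder filter against local LMDB storage."""

    params = [10_000, 1_000_000]
    param_names = ["rows"]

    def setup(self, rows):
        from arcticdb import QueryBuilder

        self.path, self.ac, self.lib = _make_lmdb_library("qb")
        self.lib.write("sym", _make_frame(rows))
        # Build the query once so only the filtered read is timed.
        q = QueryBuilder()
        self.query = q[q["price"] > 0.5]

    def teardown(self, rows):
        self.ac.delete_library("qb")
        shutil.rmtree(self.path, ignore_errors=True)

    def time_filter(self, rows):
        self.lib.read("sym", query_builder=self.query)
```

Building the DataFrame and the query in `setup` keeps that work out of the timed methods, so the timings focus on ArcticDB’s write, read and filter paths. Helper functions prefixed with an underscore are not picked up as benchmarks, since ASV only collects names with its benchmark prefixes.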

By maintaining a clear and comprehensive benchmarking strategy, we can check that each component of ArcticDB performs well under varied conditions, preserving the database’s reliability and performance.

Importance of Choosing How to Benchmark ArcticDB

ArcticDB stands out for its ease of use and high performance, but it has to work with storage that varies widely in performance, from low-latency memory-mapped files through to high-latency cloud storage like AWS S3. Cloud operations incur additional costs, so benchmarking against cloud storage can be expensive, and we must balance our benchmarking between local environments and cloud-based storage. To optimize our approach, we categorize benchmarks by the primary bottleneck affecting each operation:

CPU/Memory Intensive Benchmarks: These are typically run locally using LMDB. This setup allows us to test the processing capabilities and memory usage of ArcticDB without incurring the costs associated with cloud storage.

I/O Intensive Benchmarks: These benchmarks focus on operations dominated by input/output, which matter most when using networked storage such as AWS S3. These tests check that ArcticDB handles high data throughput efficiently, a typical scenario in production environments.

This split lets us balance thorough performance testing against cost. By choosing carefully when and how to run these benchmarks, we can make sure that ArcticDB remains both fast and economical for our users.
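One way to encode this split is to pick the storage backend from a benchmark’s category. The helper below is a hypothetical sketch: the category names, the `ARCTICDB_BENCH_S3_URI` environment variable and the bucket name are assumptions for illustration. It only builds connection strings in ArcticDB’s `lmdb://path` and `s3://endpoint:bucket` formats.

```python
import os
import tempfile

# Hypothetical default; a real setup would point at a dedicated benchmark bucket.
DEFAULT_S3_URI = "s3://s3.eu-west-2.amazonaws.com:arcticdb-benchmarks?aws_auth=true"


def storage_uri_for(category: str) -> str:
    """Return an ArcticDB connection string for a benchmark category.

    "cpu_memory": local LMDB in a fresh temp directory, so runs incur no cloud
    cost and measure compute and memory rather than network latency.
    "io": S3, so network round-trips and throughput are part of what is measured.
    """
    if category == "cpu_memory":
        return "lmdb://" + tempfile.mkdtemp(prefix="arcticdb_bench_")
    if category == "io":
        return os.environ.get("ARCTICDB_BENCH_S3_URI", DEFAULT_S3_URI)
    raise ValueError(f"unknown benchmark category: {category!r}")


if __name__ == "__main__":
    print(storage_uri_for("cpu_memory"))
    print(storage_uri_for("io"))
```

The returned string could be passed to `Arctic(...)`, so the same benchmark class can run against either backend, with the expensive S3 runs reserved for the benchmarks where I/O is the bottleneck.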

The Effects of Using ASV

The benchmarks developed using ASV help us ensure that changes to ArcticDB do not degrade its performance. Here are a couple of examples:

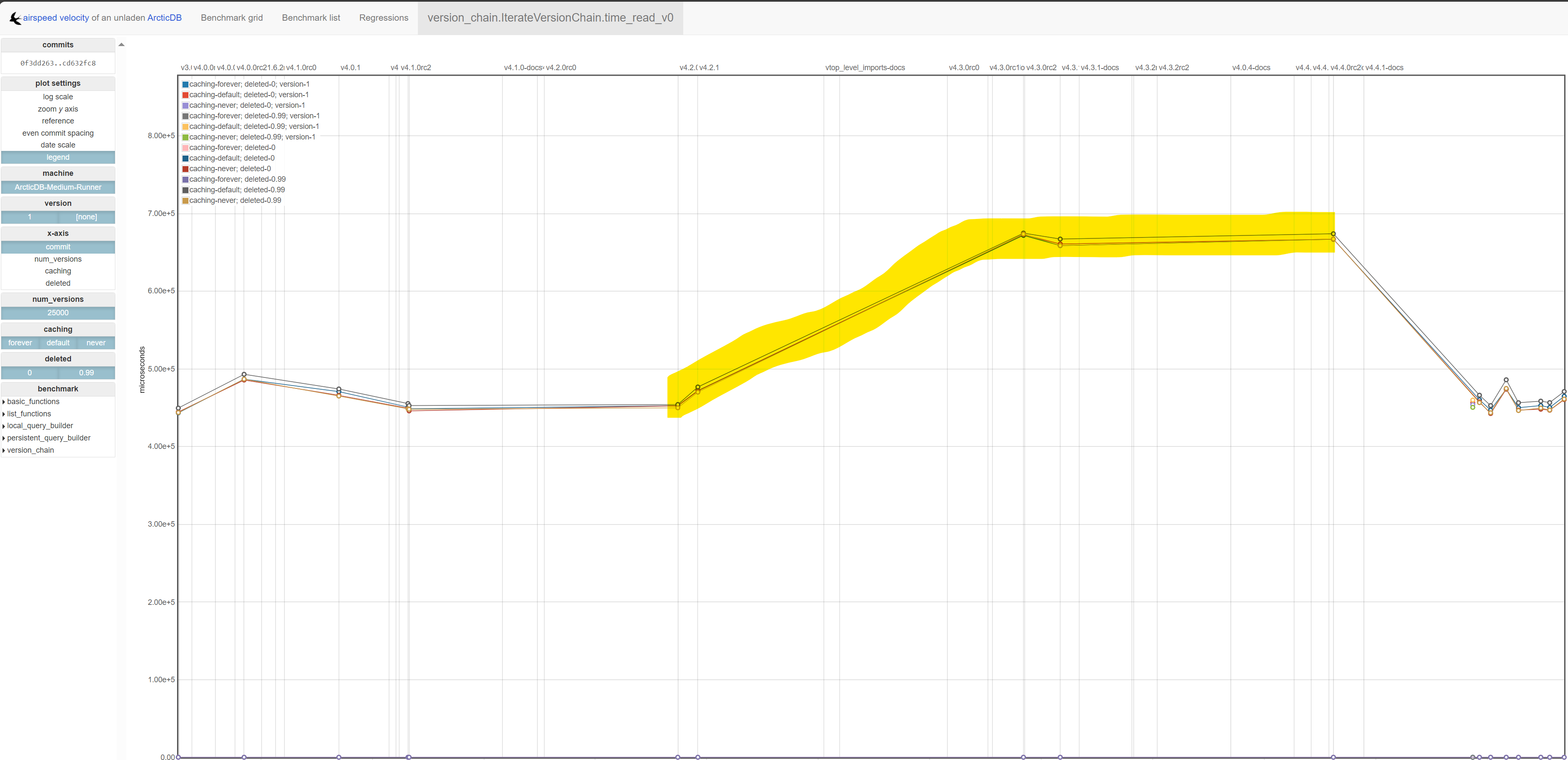

1. Identifying and Resolving Regressions: The benchmarks run on every pull request, which helps prevent regressions from entering the main branch. As we add more benchmarks over time, we can also identify regressions that were introduced in the past. In one instance, we detected a significant regression in one of our core features: iterating over the ‘version-chain’ and reading older versions. The ASV benchmarks highlighted this issue, allowing us to address it promptly and improve the user experience.

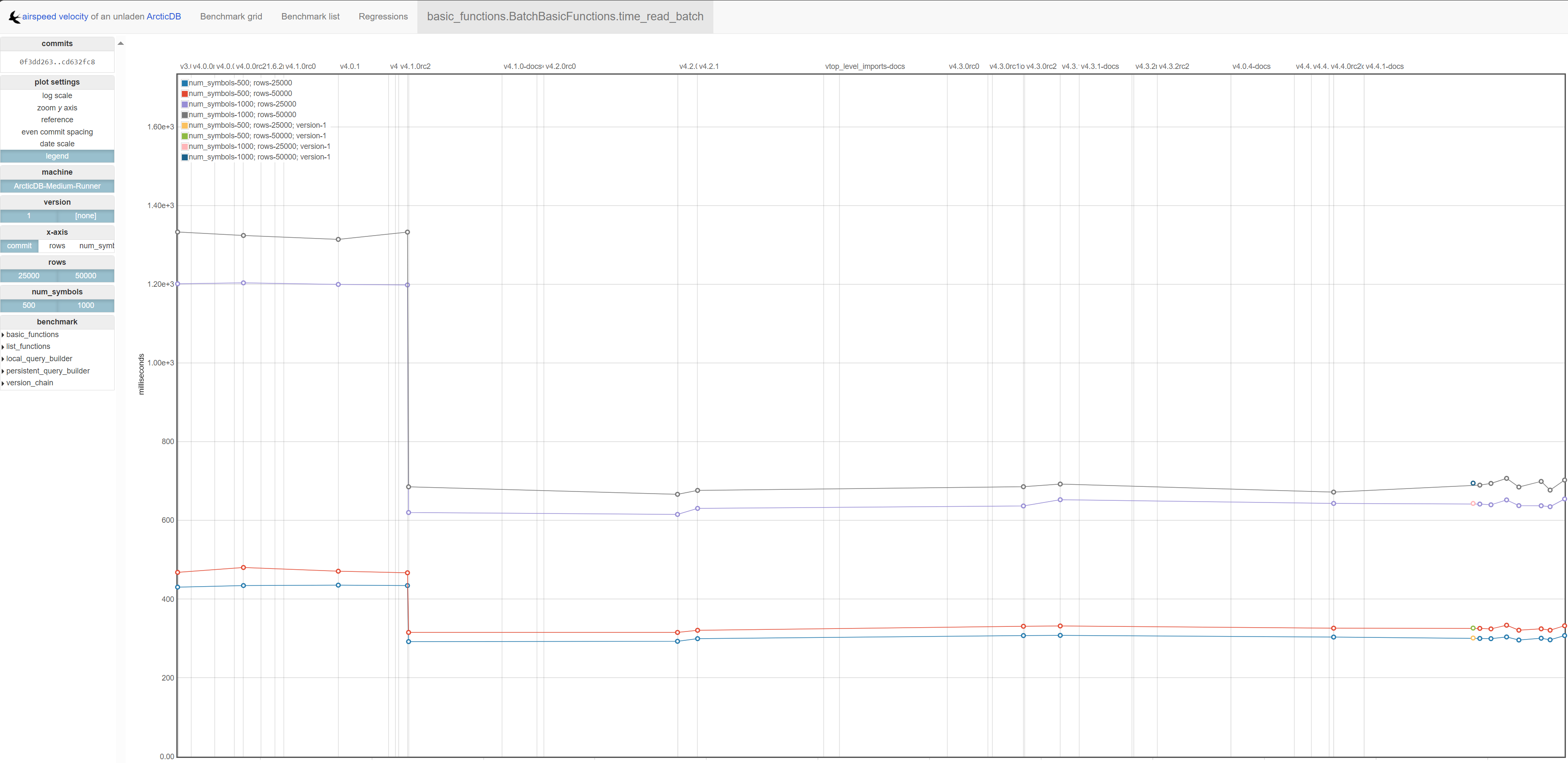

2. Validating Performance Improvements: In another case, we implemented a fix intended to improve performance. Our ASV benchmarks confirmed that the change improved the method’s efficiency, which both validated the fix and demonstrated the value of the benchmarks.
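A benchmark that could surface a version-chain regression like the one in the first example might time reads of an old version as the chain grows. This is a minimal sketch, assuming ArcticDB’s `Library.read(..., as_of=...)` and `Library.list_versions` on local LMDB storage; the class name, chain lengths and symbol name are hypothetical, and it is not the benchmark that actually caught the issue. Running the same benchmark before and after a change is also how an improvement like the one in the second example can be confirmed.

```python
import shutil
import tempfile


class VersionChain:
    """Hypothetical ASV benchmarks for walking a symbol's version chain on LMDB."""

    params = [10, 100]  # number of versions written before timing
    param_names = ["num_versions"]

    def setup(self, num_versions):
        # Imported here so ASV can collect the module without loading ArcticDB.
        import pandas as pd
        from arcticdb import Arctic

        self.path = tempfile.mkdtemp(prefix="arcticdb_asv_")
        self.ac = Arctic(f"lmdb://{self.path}")
        self.lib = self.ac.get_library("versions", create_if_missing=True)
        # Every write adds a new version of "sym", lengthening its version chain.
        for i in range(num_versions):
            self.lib.write("sym", pd.DataFrame({"value": [i]}))

    def teardown(self, num_versions):
        self.ac.delete_library("versions")
        shutil.rmtree(self.path, ignore_errors=True)

    def time_read_oldest_version(self, num_versions):
        # Version 0 is the oldest, the furthest back along the chain.
        self.lib.read("sym", as_of=0)

    def time_read_latest_version(self, num_versions):
        self.lib.read("sym")

    def time_list_versions(self, num_versions):
        self.lib.list_versions("sym")
```

Parameterizing on chain length makes it visible when the cost of reading old versions grows faster than expected as more versions accumulate.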

Conclusion

The careful and strategic benchmarking of ArcticDB is not just about maintaining its performance; it is also about understanding and optimizing how the database interacts with different storage environments, particularly cloud-based ones. By implementing a balanced benchmarking strategy that distinguishes between CPU/memory-intensive and I/O-intensive operations, we ensure that ArcticDB remains efficient and cost-effective.

This approach allows us to continuously refine our technology, making changes without degrading performance. ArcticDB demonstrates how performance tracking and optimization can substantially improve software development.

Ultimately, the insights gained from the benchmarking guide our development efforts.
